Common contemporary OSs include Microsoft Windows, Mac OS X, and Linux. Microsoft Windows has a significant majority of market share in the desktop and notebook computer markets, while the server and embedded device markets are split amongst several OSs.

Linux

Linux (also known as GNU/Linux) is one of the most prominent examples of free software and open source development, which means that its underlying source code can typically be freely used, modified, and redistributed by anyone. The name “Linux” comes from the Linux kernel, started in 1991 by Linus Torvalds. The system’s utilities and libraries usually come from the GNU Project (which is why the system is also known as GNU/Linux).

Linux is best known for its use on servers. It is also used as an operating system for a wide variety of computer hardware, including desktop computers, supercomputers, video game systems, and embedded devices such as mobile phones and routers.

Design

Linux is a modular Unix-like OS. It derives much of its basic design from principles established in Unix during the 1970s and 1980s. Linux uses a monolithic kernel that handles process control, networking, and access to peripherals and file systems. Device drivers are integrated directly into the kernel. Much of Linux’s higher-level functionality is provided by separate projects that interface with the kernel. The GNU userland is an important part of most Linux systems, providing the shell and the Unix tools that carry out many basic OS tasks. Together with the kernel, these tools form a complete Linux system, on top of which a GUI can be run, usually on the X Window System (X).

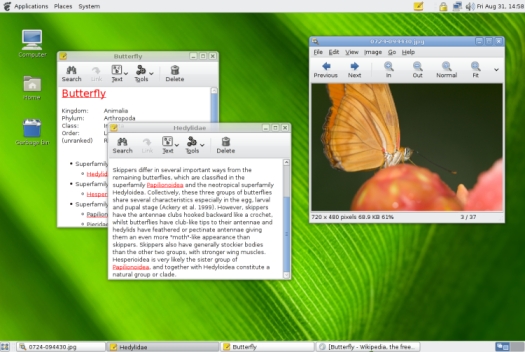

Linux can be controlled through a text-based command line interface (CLI), a GUI, controls on the device itself (as on embedded machines), or a combination of these. Desktop machines have three popular user interfaces (UIs), also called desktop environments: KDE, GNOME, and Xfce. These UIs run on top of X, which provides network transparency: a graphical application running on one machine can be displayed and controlled from another. (Imagine a game running on your computer that your friend can see and play from their own computer.) The window manager controls the placement and appearance of individual application windows and interacts with the X Window System.
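Network transparency shows up in how an X client decides where to draw: it reads the `DISPLAY` environment variable, conventionally written as `host:display.screen` (for example `otherpc:0.0`), where an empty host means the local machine. The sketch below parses that common form. The names `parse_display` and `DisplaySpec` are hypothetical, and the sketch deliberately ignores less common forms such as socket paths or IPv6 addresses.

```python
from typing import NamedTuple, Optional


class DisplaySpec(NamedTuple):
    host: Optional[str]  # None means "draw on the local machine"
    display: int         # which X server on that host
    screen: int          # which screen of that server (defaults to 0)


def parse_display(value: str) -> DisplaySpec:
    """Parse a simple X DISPLAY string of the form [host]:display[.screen]."""
    host, sep, rest = value.rpartition(":")
    if not sep:
        raise ValueError(f"not a DISPLAY string: {value!r}")
    display_part, _, screen_part = rest.partition(".")
    return DisplaySpec(
        host=host or None,
        display=int(display_part),
        screen=int(screen_part) if screen_part else 0,
    )


print(parse_display(":0"))           # local X server
print(parse_display("otherpc:1.0"))  # X server on another machine
```

An application started with `DISPLAY` pointing at another host sends its drawing requests to that host’s X server, which is what lets the window appear, and be controlled, on a different machine.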

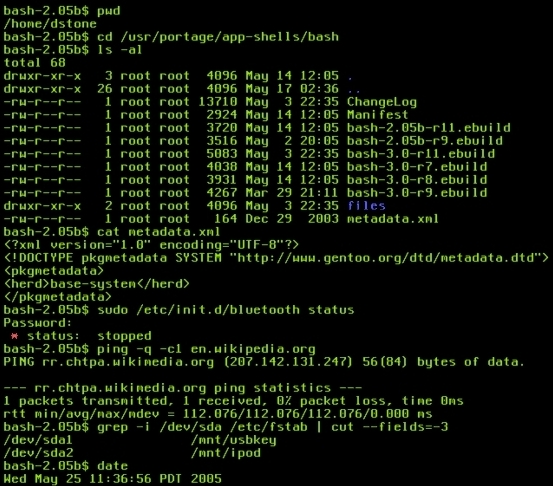

A Linux system usually provides a CLI of some sort through a shell. Server distros may provide nothing but a CLI. Most low-level Linux components use the CLI exclusively. The CLI is particularly suited to automating repetitive or delayed tasks, and it makes inter-process communication very simple, for example by piping the output of one program into the input of another. A graphical terminal is often used to access the CLI from a Linux desktop.
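Much of the CLI’s simple inter-process communication comes from pipes: a shell command such as `grep error log.txt | sort | uniq -c` passes each program’s output to the next program’s input. The Python sketch below mimics that pipeline with generator stages to show the data flow. It is only an illustration: real shell pipes connect separate processes through the kernel, and the stage functions here are hypothetical stand-ins for the real tools.

```python
from itertools import groupby
from typing import Iterable, Iterator, Tuple


def grep(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Pass through only lines containing pattern (like `grep pattern`)."""
    return (line for line in lines if pattern in line)


def sort_lines(lines: Iterable[str]) -> Iterator[str]:
    """Sort the lines (like `sort`); this stage must read all input first."""
    return iter(sorted(lines))


def uniq_count(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Count runs of adjacent identical lines (like `uniq -c`)."""
    for line, group in groupby(lines):
        yield sum(1 for _ in group), line


log = [
    "error: disk full",
    "info: started",
    "error: disk full",
    "error: timeout",
]

# Equivalent in spirit to: grep error | sort | uniq -c
for count, line in uniq_count(sort_lines(grep("error", log))):
    print(count, line)
```

Each stage knows only about its input and output, which is what makes such pipelines easy to recombine when automating tasks.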

Development

The primary difference between Linux and many other OSs is that the Linux kernel and other components are free and open source software. Free software projects, although developed collaboratively, are often produced independently of each other. A Linux distribution, commonly called a “distro”, is a project that manages a remote collection (repository) of Linux-based software and facilitates installation of a Linux OS. Distros include system software and application software in the form of packages. A distribution is responsible for the default configuration of installed Linux systems, for system security, and, more generally, for integrating the different software packages into a coherent whole.
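One part of integrating packages into a coherent whole is installing them in an order that respects their dependencies. Real package managers do much more than this; the sketch below shows only the core idea, a topological sort using Python’s standard `graphlib` module (Python 3.9 or later). The package names and their dependencies are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical packages, each mapped to the packages it depends on.
dependencies = {
    "web-browser": {"gui-toolkit", "ssl-library"},
    "gui-toolkit": {"c-library"},
    "ssl-library": {"c-library"},
    "c-library": set(),
}


def install_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every package comes after its dependencies.

    Raises graphlib.CycleError (a ValueError subclass) if the
    dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())


print(install_order(dependencies))
```

Several valid orders may exist (here `gui-toolkit` and `ssl-library` can be installed in either order); the only guarantee is that `c-library` comes first and `web-browser` comes last.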

Linux is largely driven by its developer and user communities. Some vendors develop and fund their distros on a volunteer basis, while others maintain a community version of their commercial distros. In many cities and regions, local associations known as Linux Users Groups (LUGs) promote Linux and free software. There are also many online communities that provide support to Linux users and developers: most distros have IRC chatrooms or newsgroups, many host mailing lists, and online forums offer another means of support.

Most Linux distros support dozens of programming languages. The most common collection of utilities for building both Linux applications and OS programs is the GNU toolchain, which includes the GNU Compiler Collection (GCC) and the GNU build system. GCC provides compilers for Ada, C, C++, Java, and Fortran. Most distros also include support for Perl, Ruby, Python, and other dynamic languages. The two main frameworks for developing graphical applications are those of GNOME and KDE.

Uses

As well as distros designed for general-purpose use on desktops and servers, there are distros specialized for different purposes, including support for particular computer architectures, embedded systems, stability, security, localization to a specific region or language, targeting of specific user groups, support for real-time applications, or commitment to a given desktop environment. Linux runs on a more diverse range of computer architectures than any other OS.

Although Linux lacks ports of some Mac OS X and Microsoft Windows programs in domains such as desktop publishing and professional audio, roughly equivalent applications are available for Linux. Most Linux distros include some sort of program for browsing a list of free software applications that have already been tested and configured for that distro. Many free software titles popular on Windows are also available for Linux, and a growing amount of proprietary software supports Linux as well.

Historically, Linux has been used as a server OS and has been very successful in that area because of its relative stability and long uptimes. Linux is the cornerstone of the LAMP server-software combination (Linux, Apache, MySQL, Perl/PHP/Python), which has become popular among developers and is one of the more common platforms for website hosting.

Windows

Windows (created by Microsoft) is the dominant OS on the market today. The two most popular versions of Windows for the desktop are XP and Vista (Vista being the latest version). There is also a mobile version of Windows, as well as a server version (the latest being Windows Server 2008). Windows is entirely proprietary and closed-source, which differs sharply from Linux’s free and open source licenses. Most major hardware manufacturers make their products compatible with Windows, so Windows runs on almost all kinds of new hardware.

XP

“XP” is short for “experience.” Windows XP is the successor to both Windows 2000 Professional and Windows ME. XP comes in two main editions: Home and Professional. The Professional edition has additional features and is targeted at power users and business clients. There is also a Media Center edition with additional multimedia features that enhance the ability to record and watch TV shows, view DVD movies, and listen to music.

Windows XP features a task-based GUI. XP analyzes the performance impact of visual effects and uses this to decide whether to enable them, so that the new functionality does not consume excessive processing overhead. Users can switch between the different visual themes through their preferences.

Microsoft has released a set of service packs for Windows XP (currently three) that fix problems and add features. Each service pack is a superset of all previous service packs and patches, so only the latest service pack needs to be installed; each also includes new revisions. Support for Windows XP Service Pack 2 will end on July 13, 2010 (six years after its general availability).

Vista

Windows Vista contains many changes and new features compared with XP, including an updated GUI and visual style, improved search features, new multimedia creation tools, and redesigned networking, audio, print, and display subsystems. Vista also aims to improve communication between machines on a home network, using peer-to-peer technology to simplify sharing files and digital media between computers and devices.

Windows Vista is intended to be a technology-based release that provides a base for advanced technologies, many of which relate to how the system functions and are thus not readily visible to the user. An example is the complete restructuring of the audio, print, display, and networking subsystems: while the results of this work are visible to software developers, end users will see only what appear to be evolutionary changes in the UI.

Vista includes technologies that use fast flash memory to improve system performance by caching commonly used programs and data. Another new technology uses machine learning techniques to analyze usage patterns, allowing Windows Vista to make intelligent decisions about what content should be in system memory at any given time. As part of the redesign of the networking architecture, IPv6 has been fully incorporated into the OS, and a number of performance improvements have been introduced, such as TCP window scaling. For graphics, Vista has a new Windows Display Driver Model and a major revision of Direct3D. At the core of the OS, many improvements have been made to the memory manager, process scheduler, and I/O scheduler.
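TCP window scaling comes down to a small piece of arithmetic. The window field in the TCP header is only 16 bits, so without scaling a receiver can advertise at most 65,535 bytes. With window scaling (specified in RFC 7323, originally RFC 1323), the two ends agree on a shift count during the connection handshake, and the effective window is the advertised value shifted left by that count, with the shift capped at 14. The helper below is a hypothetical illustration of that arithmetic, not Vista’s implementation.

```python
MAX_WINDOW_FIELD = 0xFFFF  # the TCP header's window field is 16 bits
MAX_SHIFT = 14             # largest shift count permitted by RFC 7323


def effective_window(advertised: int, shift: int) -> int:
    """Receive window in bytes implied by a window field value and a scale shift."""
    if not 0 <= advertised <= MAX_WINDOW_FIELD:
        raise ValueError("window field must fit in 16 bits")
    if not 0 <= shift <= MAX_SHIFT:
        raise ValueError("shift count must be between 0 and 14")
    return advertised << shift


print(effective_window(65535, 0))   # no scaling: 65,535 bytes
print(effective_window(65535, 14))  # maximum: 1,073,725,440 bytes (about 1 GiB)
```

Scaling matters on fast, long-distance links, where the amount of data in flight (bandwidth times round-trip time) can far exceed 64 KB; without it, the sender would sit idle waiting for acknowledgments.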

Security

Windows is widely considered the OS most vulnerable to attacks, and security software is far more of a necessity on Windows than on Linux or OS X. Windows has been criticized for its susceptibility to malware such as viruses, trojan horses, and worms. Security issues are compounded by the fact that users of the Home edition, by default, receive an administrator account that provides unrestricted access to the underpinnings of the system. If the administrator’s account is compromised, there is no limit to the control an attacker can assert over the PC.

Windows has historically been a tempting target for virus creators because of its dominant share of the world market. Security holes are often invisible until they are exploited, which makes preemptive action difficult. Microsoft has stated that the release of a patch is often what triggers the spread of exploits against the hole it fixes: crackers work out which problem the patch fixes and then attack systems that have not yet been patched. Turning on automatic updates is therefore recommended, so that patches are installed before attackers can exploit the holes they fix.

OS X

OS X is the major operating system created by Apple Inc. Unlike its predecessor (referred to as Classic or OS 9), OS X is a UNIX-based operating system. OS X is currently at version 10.5, with 10.5.3 being the latest major software update, and plans for 10.6 have been announced. Apple has named each version of OS X after a large cat: 10.0 Cheetah, 10.1 Puma, 10.2 Jaguar, 10.3 Panther, 10.4 Tiger, 10.5 Leopard, and the unreleased 10.6 Snow Leopard.

Apple also develops a server version of OS X that is very similar to the standard OS X but is designed to work on Apple’s Xserve hardware. Among the tools included with the server version is workgroup management and administration software that simplifies access to common network services, including a mail transfer agent, a Samba server, an LDAP server, a domain name server, a graphical interface for distributed computing (which Apple calls Xgrid Admin), and others.

Description

OS X is a UNIX-based OS built on top of the XNU kernel, with standard Unix facilities available from the CLI. Apple has layered a number of components over this base, including its own GUI. The most notable features of this GUI are the Dock and the Finder.